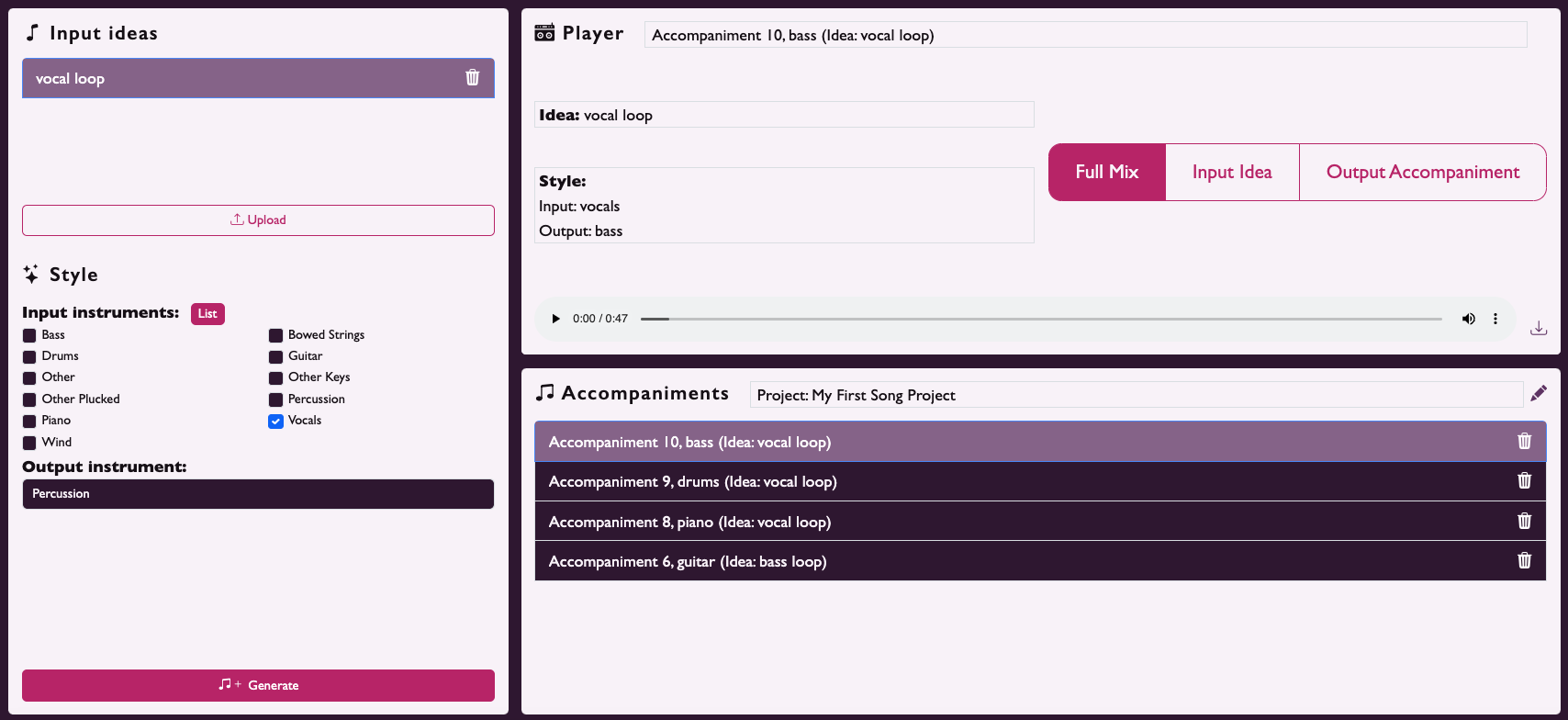

Define your inputs and vision

Upload your core ideas—audio clips, reference tracks, or written descriptions. Specify instruments, sounds, and styles to shape generations to your liking.

AçaiMusic's artist-centric AI generates loops and sounds that just fit — tailored to the style, instruments, key, tempo, and vibe of your project.

Instantly generate musical parts that fit—harmonically, rhythmically, and stylistically. Browse variations, layer ideas, and save what works.

Make detailed edits or regenerate parts until everything clicks. Download in studio-quality file formats, ready for your DAW.

Instantly generate and audition loops, sounds, and instrument parts adapted to your track's key, tempo, and vibe—no manual filtering required.

Spark new ideas fast with studio-quality audio parts, ready to drop straight into your project—loops, instruments, and textures that inspire.

Custom-tailor the instruments and characteristics of your sounds—no more muting or editing out unwanted elements in pre-made loops.

Keep your product fresh—let users generate loops, instrument parts, samples, and accompaniments based on content already in your ecosystem.

Let your users generate new sounds inspired by their favorite tracks or artists—all within your platform.

Our API is simple to integrate and built to save your team time—delivering high performance without the development overhead.

Thanks to our partnerships with rightsholders, our models are ethically sourced and creators are properly compensated, unlike with other models.

Our AI lets you add specific instruments or layers without unwanted artefacts or unexpected changes elsewhere in your track.

AçaiMusic's models understand musical context, enabling smoother integration into your projects than traditional generative tools.

Michele holds a Bachelor's in Computer Science and a Master's in Cognitive Neuroscience from the University of Trento. After completing his research thesis in collaboration with UniTN, CIMeC, and VU Amsterdam, he joined the Technology Innovation Institute in Abu Dhabi as an Artificial Intelligence Engineer within the Extreme Scale LLM Team, where he worked on the development of several Large Language Models, including the state-of-the-art open-source model Falcon 180B and Noor. He focused on model evaluation, building reliable and efficient distributed code, managing model checkpoints for inference, and fine-tuning models for alignment using reinforcement learning from human feedback and direct preference optimisation.

Michele has been writing poems and playing guitar since he was a child, which made songwriting a natural next step for him. In the last few years he has published and produced songs in recording studios around the world, giving him first-hand experience of life as an emerging artist.

Marco worked as a PhD researcher within the Centre for Digital Music at Queen Mary University of London where, as a member of the UKRI Centre for Doctoral Training in AI and Music, he researched and developed AI methods for Audio Effects Modelling and Neural Synthesis of Sound Effects. He also worked as a research assistant in the Audio Experience Design Lab at Imperial College London on 3D audio and headphone acoustics. He holds a BEng in Computer Engineering and an MEng in Electronic Engineering from Sapienza University of Rome, as well as an MSc in Sound and Music Computing from Queen Mary University of London.

Marco previously worked as a guitar amplifier designer and project engineer at Blackstar Amplification, where he had fun with high-voltage valve amps, low-voltage amps and pedals, and helped to model analogue circuits. Before that, he designed and hand-built overdrive and distortion pedals for his own small company, Mad Hatter Stompboxes.

David holds a Bachelor's degree in Electrical and Electronic Engineering from Imperial College. After working as a credit derivatives trader at Goldman Sachs, he joined AI Music's R&D team, where he built AI systems for music and audio tagging and recommendation. He deepened his knowledge of music technology and the music industry while managing Universal Music Group's REDD incubator at Abbey Road. Most recently, he was the lead product manager at the music creator tools startup DAACI, where he led the launch of a generative drums co-pilot tool.

David began playing violin at age 5 and joined Italy's first youth symphonic orchestra at 11. Classically trained at Rome's conservatoire, he holds an ABRSM diploma in violin performance and a CertHE in Music and Sound Recording from the University of Surrey. Since his teens, he has composed music using technology, gaining broad experience in music creation and production.
